Running a vulnerability scan heavily taxes your network, not to mention security teams, to the point where it can impact the operation of a business. In some cases we have even seen scanning cause shutdowns, resulting in millions of dollars in lost revenue. Last year we saw another “vulnerability scan remote DoS” issue published, this time for Moxa products.

Despite the deficiencies, many organizations choose scanning as the cornerstone of their vulnerability management program. If you want to assess the security of your network, is vulnerability scanning absolutely essential?

Scanning is Not the Only Way to Uncover Risk

Scanning is not the only way to assess risk within your environment; however, it is the most common method. As with CVE/NVD, the penchant for scanning stems in part from its lengthy, twenty-five-year history within the security industry. For some organizations, scanning has always been the default approach, and vulnerability scanning has grown into a multi-billion-dollar industry that is still going strong today.

Scanning certainly has value and is a helpful tool. However, we argue that vulnerability scanning shouldn’t be the backbone of your security program.

A common data point in this series has been the fact that the most-used data source, CVE/NVD, fails to report over 94,000 public vulnerabilities, and scanners are likely relying on that same source. If your vulnerability program is solely dependent on vulnerability scanning, your organization is likely unaware of 30% – 60% of known risk. This is a significant blind spot, and an intelligence gap of this size will directly affect the prioritization, and therefore the outcomes, of your risk management program.

What are the Limitations of Traditional Vulnerability Scanning?

It isn’t a secret that most security tools directly source their signatures from CVE/NVD, but did you know that some scanning vendors may actually only supply half of what MITRE provides?

If your vulnerability management program is underpinned by findings from scanning, this is bad news. The 30% delta resulting from CVE’s incomplete dataset already hampers organizations, but if it turns out that your team is actually unaware of nearly 60% of known risk, how can your team be expected to make informed decisions? Considering that there are over 297,000 disclosed vulnerabilities, how many vulnerability signatures does your scanning vendor currently provide?

You will want to calculate your exact intelligence gap, since scanning tools will never report missing vulnerabilities as issues. As such, your vulnerability reports can give you a false sense of security by stating that “there were no issues found.” However, just because a report comes back clean doesn’t mean that those vulnerabilities aren’t there.
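As a rough illustration of that calculation, the sketch below computes the share of disclosed vulnerabilities a source does not cover. The totals of 297,000 and 94,000 are the figures quoted in this article; the function name `intelligence_gap` and the scanner signature count are hypothetical, and treating one signature as one vulnerability is a simplifying assumption.

```python
def intelligence_gap(total_disclosed: int, covered: int) -> float:
    """Return the percentage of disclosed vulnerabilities a source does not cover."""
    if total_disclosed <= 0:
        raise ValueError("total_disclosed must be positive")
    if not 0 <= covered <= total_disclosed:
        raise ValueError("covered must be between 0 and total_disclosed")
    return 100.0 * (total_disclosed - covered) / total_disclosed


# Figures quoted in this article.
TOTAL_DISCLOSED = 297_000
MISSING_FROM_CVE = 94_000

# Gap for a team that relies on CVE/NVD alone.
cve_gap = intelligence_gap(TOTAL_DISCLOSED, TOTAL_DISCLOSED - MISSING_FROM_CVE)

# Hypothetical vendor signature count, for illustration only.
# Replace it with the number your scanning vendor actually publishes.
HYPOTHETICAL_SCANNER_SIGNATURES = 120_000
scanner_gap = intelligence_gap(TOTAL_DISCLOSED, HYPOTHETICAL_SCANNER_SIGNATURES)

print(f"CVE/NVD gap: {cve_gap:.1f}%")
print(f"Scanner gap: {scanner_gap:.1f}%")
```

With the article's figures, the CVE/NVD gap works out to roughly 31.6%, in line with the 30% delta described above. The scanner figure depends entirely on the signature count you plug in.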

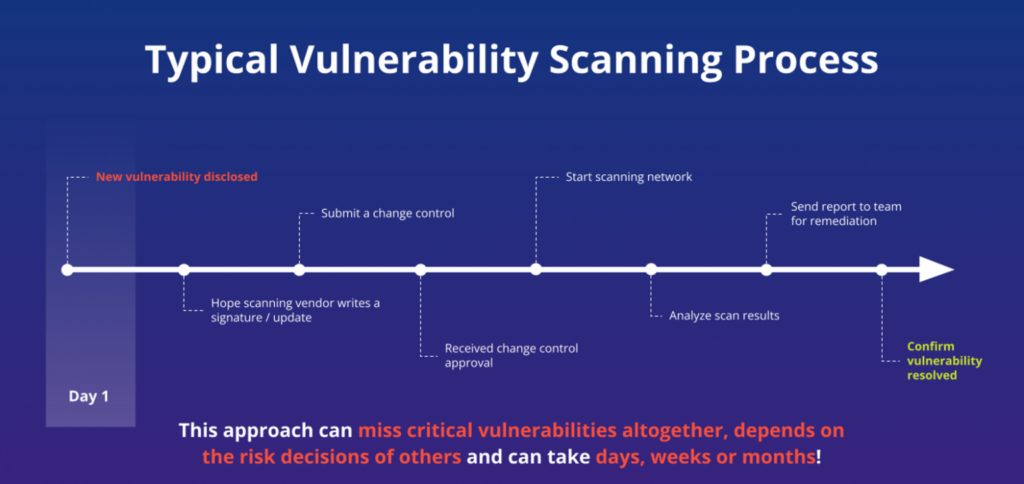

In addition to lacking data, there are two specific aspects unique to scanning that hinder an organization’s ability to manage risk in a timely manner. Those aspects are:

- The time needed to create signatures

- The resources required to run scans

With Vulnerability Scanning, Risk is Never Shown in Real-Time

Ultimately, these two factors prevent vulnerability prioritization and remediation from occurring in real-time. To qualify as real-time, security teams would need the ability to triage vulnerabilities as soon as they are disclosed. But due to the scanning process itself, it often takes a considerable amount of time until organizations are even alerted to issues.

Creating Signatures Takes Too Long

Scanning signatures are often dependent on MITRE creating and opening a CVE ID, and that process alone can take days, weeks, or even months. Then, the entry has to be passed to NVD, which enriches the data, typically just enough to make it actionable. Next, the scanning vendor has to digest the information, write a plugin/signature, do QA, push it to the feed, and get it into the hands of the customer. Only then is there a chance for the vulnerability to be identified in a future scan.

Meanwhile, the fact that a CVE entry is still being created doesn’t mean that information like exploitability is unknown. There may be known public exploits, and if there are, malicious attackers could be actively using them in the wild. Therefore, time is of the essence.

However, if scanning is your go-to method for vulnerability discovery, you will have to wait until the issue is reported to MITRE and for the plugin/signature lifecycle to run its course. Afterwards, you would have to download the signature, run a slow, disruptive scan, and then triage the findings. Organizations are better off if they can prioritize risk as soon as it is disclosed.

Vulnerability Scans Can Be Expensive

New vulnerabilities are published even while you are running today’s scan. Issues may remain on your network until the next time you scan, regardless of how exploitable they may be. And even if your organization does run a scan on a weekly or monthly basis, it is likely limited to a fraction of your network and isn’t representative of actual risk.

How can organizations offset this? By purchasing more scanning tools with the intent to plug network gaps? By running more scans? Depending on how many endpoints your organization has, this may not be an option due to cost. Even if it were within budget, your systems may not be able to handle it. You likely cannot scan your entire network in a reasonable amount of time.

Scanning is notorious for taxing systems, and we’ve all heard stories of scans taking days, weeks, or even longer to finish. While some people may be able to start a scan and then walk away, depending on their role within the organization, multiple scans could have a major impact on your network performance. Worse, such scans always run the risk of crashing a remote system. Some organizations have learned they simply can’t scan entire subnets with ‘fragile’ technology like IoT, ICS, and SCADA.

Vulnerability Scanning Shows Only a Snapshot of Overall Risk

Putting all these factors together, you start to realize that the risk surfaced by scanning is never what’s affecting you at the present moment. In reality, what you see is the risk that affected you weeks or months ago. You can’t see today’s risk because the vulnerabilities that were just disclosed haven’t even had signatures written for them yet.

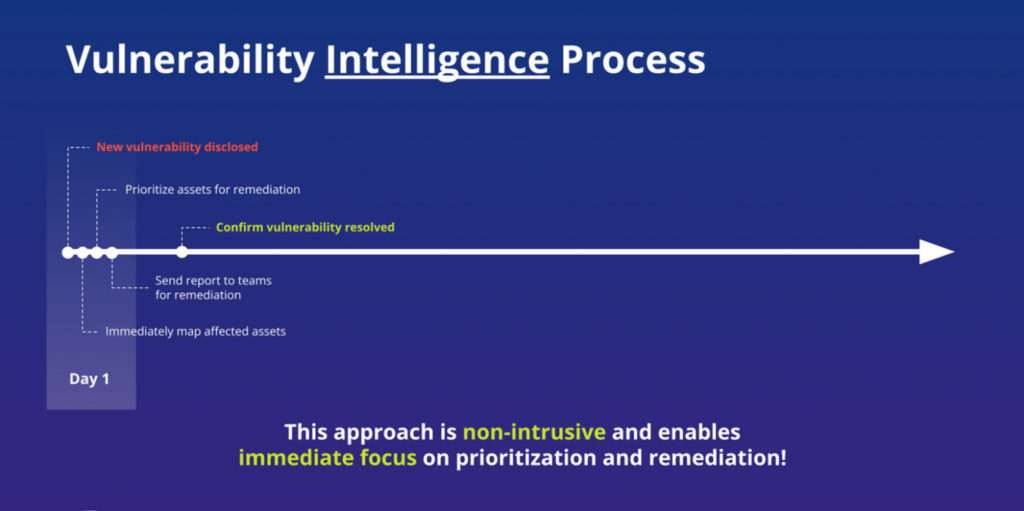

Vulnerability Intelligence Informs Better Risk Decisions

There is a more effective, less disruptive option that allows organizations to surface risk in their environment. That method is relying on comprehensive and detailed vulnerability intelligence that is contextualized to a list of your organization’s assets.

Most organizations are hopefully already familiar with what assets they use. And depending on their tenure, seasoned security professionals likely know which deployed assets are critical for daily operation. So if you already have this knowledge, why subject yourself to repeated scans to identify assets you already know are there?

Creating an Asset Inventory and SBOM

If a formal list of deployed assets does not exist, it is an enormous undertaking to create one. However, doing so has tremendous benefits. Having an asset inventory enables organizations to perform true risk-based vulnerability management, allowing for better prioritization and manageable remediation.

Organizations can use their Configuration Management Database (CMDB) to facilitate this process. From there, security teams can identify which assets are essential for daily operations and which house sensitive data, among other things, allowing vulnerabilities to be mapped to those assets. A Software Bill of Materials (SBOM) will also be important for understanding an organization’s risk profile.

Once mapped, organizations can experience the full benefits of timely vulnerability intelligence. Instead of having to wait for CVE or signatures, organizations can get real-time alerts notifying them of any newly disclosed vulnerability affecting their assets. This approach allows for immediate focus on prioritization and remediation.
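The mapping step can be sketched in a few lines of Python. This is a minimal illustration, not Flashpoint's implementation: the `Asset` and `VulnRecord` structures, the product names, and the `EXAMPLE-0001` identifier are all hypothetical, and exact version-string matching stands in for the version-range logic a real feed would need.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """One entry from an asset inventory, CMDB export, or SBOM (hypothetical shape)."""
    name: str
    product: str
    version: str
    critical: bool


@dataclass(frozen=True)
class VulnRecord:
    """One entry from a vulnerability intelligence feed (hypothetical shape)."""
    vuln_id: str
    product: str
    affected_versions: frozenset[str]
    exploit_public: bool


def match_assets(assets: list[Asset], records: list[VulnRecord]) -> list[tuple[Asset, VulnRecord]]:
    """Return (asset, record) pairs where the asset's product and version are affected."""
    # Index records by lowercased product name so each asset needs one lookup.
    by_product: dict[str, list[VulnRecord]] = {}
    for rec in records:
        by_product.setdefault(rec.product.lower(), []).append(rec)

    matches = []
    for asset in assets:
        for rec in by_product.get(asset.product.lower(), []):
            if asset.version in rec.affected_versions:
                matches.append((asset, rec))
    return matches


# Illustrative data only; none of these products or IDs are real.
inventory = [
    Asset("web-01", "examplehttpd", "2.4.1", critical=True),
    Asset("hmi-07", "examplescada", "1.0.0", critical=True),
    Asset("dev-12", "examplehttpd", "2.5.0", critical=False),
]
feed = [
    VulnRecord("EXAMPLE-0001", "ExampleHTTPD", frozenset({"2.4.0", "2.4.1"}), exploit_public=True),
]

alerts = match_assets(inventory, feed)
for asset, rec in alerts:
    print(f"{asset.name}: {rec.vuln_id} (public exploit: {rec.exploit_public})")
```

Because the comparison runs against the inventory rather than the live network, it can be repeated whenever a new entry arrives without touching fragile systems.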

Remediate Vulnerabilities with Flashpoint

For this approach to work, your vulnerability intelligence has to be comprehensive and detailed. If the vulnerability entries sent to your team are incomplete, the intelligence will no longer be timely, since your team may have to spend additional hours validating them.

There are over 297,000 known vulnerabilities covering IT, OT, IoT, and third-party libraries and dependencies. Therefore, it is vital that the database you choose to rely on is robust and captures all known metadata including, but not limited to, exploitability, location, and solution details. Having access to such details will enable you to assess the risk affecting your organization, without the downsides of scanning. Sign up for a free VulnDB trial today.

Frequently Asked Questions (FAQs)

How can I identify asset risks without running a vulnerability scan?

You can identify asset risks without a scan by using Flashpoint VulnDB, which provides a comprehensive view of the global vulnerability landscape. While scanners look at what you have, Flashpoint intelligence provides critical vulnerability details for issues affecting security tech stacks, including which software versions are currently being exploited by threat actors. By comparing your internal asset list against Flashpoint’s enriched vulnerability data, you can identify critical gaps and prioritize remediation without the performance impact or network noise of a traditional scan.

| Risk Identification Method | Flashpoint Intelligence Advantage |
| --- | --- |
| Vulnerability Scanning | Only finds flaws on active, reachable network segments. |
| Flashpoint VulnDB | Identifies risks in dormant systems, IoT, and third-party libraries. |
| Exploit Monitoring | Reveals which vulnerabilities are actively being traded on the dark web. |

How does Flashpoint help prioritize vulnerabilities for remediation?

Flashpoint helps prioritize vulnerabilities by providing the VTEM (Vulnerability Timeline and Exposure Metrics) framework, which tracks the gap between disclosure and exploit availability. Instead of patching every “High” CVSS score, Flashpoint allows teams to focus on flaws that have confirmed exploit code or are trending in illicit communities. This risk-based approach ensures that security teams address the most weaponized threats first, rather than wasting time on flaws that have no real-world path to exploitation.

- Exploitability Analysis: Flags vulnerabilities with publicly available or commercial exploit kits.

- Social Risk Scores: Monitors threat actor interest on forums and encrypted chat apps.

- Remediation Context: Provides links to patches, workarounds, and vendor-specific solutions.
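The idea of ranking by real-world exploitability rather than by CVSS score alone can be sketched as a simple sort. This is an assumption-laden illustration, not the VTEM framework or any Flashpoint API: the `Finding` fields, the identifiers, and the ordering rule (exploit availability first, then asset criticality, then CVSS) are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A vulnerability mapped to an asset (hypothetical shape)."""
    vuln_id: str
    cvss: float
    exploit_available: bool
    asset_critical: bool


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings: known exploit first, then critical assets, then higher CVSS."""
    # False sorts before True, so negate the flags we want at the top.
    return sorted(
        findings,
        key=lambda f: (not f.exploit_available, not f.asset_critical, -f.cvss),
    )


# Illustrative data only.
findings = [
    Finding("EXAMPLE-A", cvss=9.8, exploit_available=False, asset_critical=False),
    Finding("EXAMPLE-B", cvss=6.5, exploit_available=True, asset_critical=True),
    Finding("EXAMPLE-C", cvss=7.5, exploit_available=True, asset_critical=False),
]

ranked = prioritize(findings)
for f in ranked:
    print(f.vuln_id, f.cvss, f.exploit_available, f.asset_critical)
```

In this toy data, the 6.5-scored finding with a known exploit on a critical asset ranks ahead of the 9.8-scored finding with no known exploit. A production scheme would likely weigh more signals than these three.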

Why is Flashpoint’s non-CVE intelligence vital for asset protection?

Flashpoint’s non-CVE intelligence is vital for asset protection because nearly one-third of all known vulnerabilities are never assigned a CVE ID. Traditional scanners and public databases like the NVD often miss these flaws, leaving your assets exposed to “hidden” risks. Flashpoint VulnDB tracks over 100,000 non-CVE vulnerabilities, ensuring that your security program has visibility into the full spectrum of risk across your entire software supply chain.

| Database Source | Vulnerability Coverage Level |
| --- | --- |
| Public NVD/CVE | Misses over 30% of disclosed vulnerabilities and third-party flaws. |
| Flashpoint VulnDB | Tracks 340,000+ entries, including zero-days and niche software. |
| Speed to Awareness | Flashpoint is consistently 14+ days faster than public notification systems. |