Promise and peril: AI threats, regulation, and oversight

Artificial Intelligence (AI) has taken the world by storm. From healthcare to finance, transportation to entertainment, the integration of AI into our daily lives is nearly ubiquitous.

However, for all of AI’s capacity for good, the world is already seeing the problems it introduces. Copyright infringement, data privacy, and misinformation only scratch the surface, and AI is also a growing contributor to the digital threat landscape. Given the rapid growth of AI and the AI marketplace, governments around the world have been under intense pressure to provide guidance.

In October 2023, US President Biden issued an Executive Order that aims to bolster safety and security, protect privacy, ensure fairness, safeguard consumers and workers, foster a robust competitive ecosystem, and establish international and governmental standards for AI use.

Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence aims to address the “extraordinary promise and peril” of AI through the following goals:

- Improve overall AI safety and security

- Enhance privacy protections for Americans

- Prevent algorithmic discrimination and bias

- Increase protections for consumers, patients, and students

- Prevent potential workforce abuse or disruptions caused by AI

- Maintain a competitive AI ecosystem

- Accelerate the creation of AI standards with international partners

- Issue standards for the government’s use of AI

AI threats: Exploitation by cyber threat actors

Flashpoint has observed threat actors and advanced persistent threat (APT) groups on illicit forums actively discussing and sharing techniques for integrating AI into their nefarious activities, including developing new tools and teaching peers how to exploit the technology.

Flashpoint has observed threat actors using AI in the following ways:

- Simplifying low-level cyberattacks and assisting in social engineering

- Spreading propaganda and misinformation via deepfakes and other methods

- Generating malicious code and malware samples

- Conducting surveillance and reconnaissance

AI as a force multiplier for low-level attacks

AI tools still pose a major risk to organizations because they significantly lower the barrier to entry for low-level attacks. AI makes basic hacking tasks easier, helping threat actors scan for open ports, deploy virtual machines, or craft phishing emails en masse.

AI is still in an early phase, and attacks that rely on AI-generated code remain unsophisticated. AI models have not yet been observed generating novel, previously unseen code. Code generated by AI tools currently tends to be basic or bug-ridden, and it is unlikely to compromise any organization that runs a proper vulnerability management program.

1. Social engineering

Arguably, the most pressing threat AI currently introduces is improved social engineering. Phishing has always been, and continues to be, a primary attack vector for ransomware and other cyber threats.

Although AI tools may not be able to produce complex malicious code, AI language models have improved significantly since their introduction. AI chatbots allow threat actors who are not native English speakers to write polished messages, and GPT-4 also lets users mimic perceived levels of sophistication: it can produce lengthy responses on nearly any subject while replicating the prose of well-known authors or celebrities.

This capability can be even more effective when paired with writing samples obtained from data breaches. With tools like ChatGPT, threat actors could feed compromised emails to a model and have it replicate the writing style of a trusted internal employee or contact, strengthening spear-phishing or whaling attacks.

2. Propaganda and misinformation

Deepfakes remain a consistent and growing tool for threat actors. By generating and abusing video or images, threat actors have targeted individuals with smear campaigns, spread disinformation, and promoted scams. In recent years, more celebrities have been targeted, and the likenesses of influencer MrBeast, Elon Musk, and others have been used to scam the unsuspecting public.

The increasing accessibility of AI text, audio, and video tools will likely make disinformation campaigns easier to produce.

3. Generating malware and malicious code

As previously stated, malware and other malicious code generated by AI tools is currently basic. However, this code can still be used in cyberattacks. On the darknet, some threat actors have claimed to have used AI to write information-stealer malware, but our analysts have not yet observed any reports of novel attacks that leveraged code generated by AI text tools. This attack vector could become more dangerous as AI improves.

4. Surveillance and reconnaissance

AI image technologies can also affect physical security, because threat actors can use AI to interpret images or videos for reconnaissance. For example, the technology can automatically identify unique objects, locations, or individuals for intelligence gathering or surveillance. Both malicious actors and researchers can use optical character recognition (OCR) software to extract text from images to assist in their attacks or investigations.

Top five AI brands used by threat actors

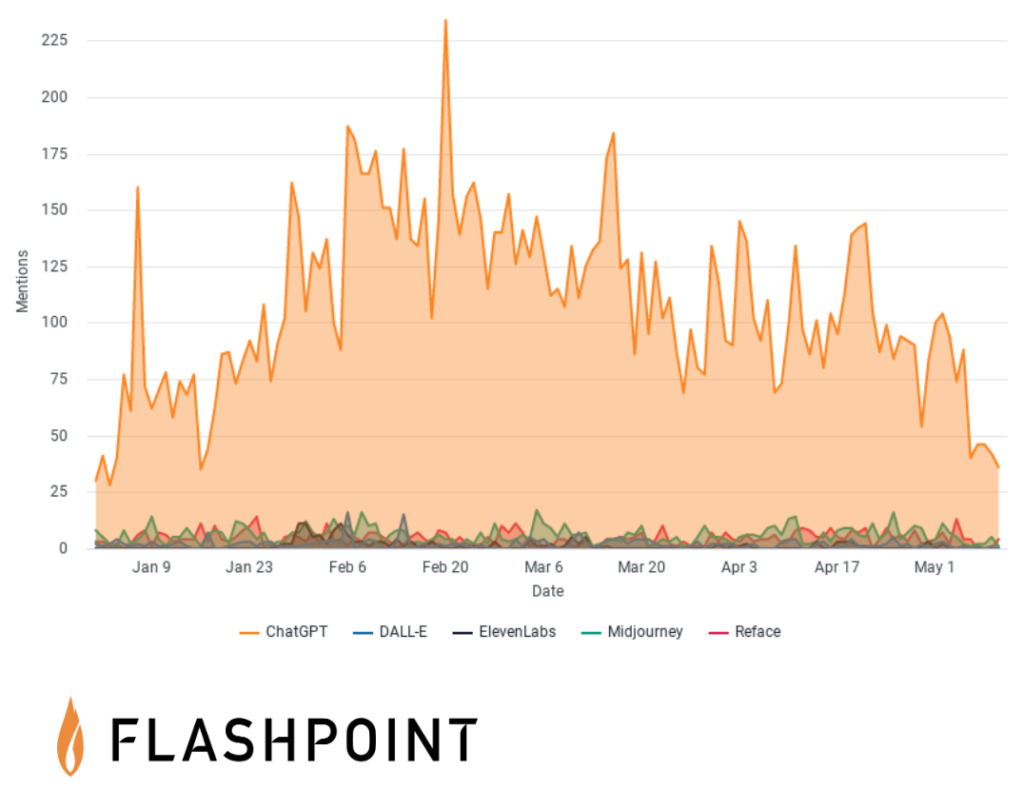

Threat actors use the same AI brands as the general public and manipulate them with well-engineered prompts or “do anything now” (DAN) roleplay, in which they convince the AI to ignore its programmed restrictions. The five AI brands most commonly used by threat actors are:

- ChatGPT

- DALL-E

- ElevenLabs

- Midjourney

- Reface

ChatGPT has more mentions than all of the other brands combined. Analysts assess that this is highly likely due to its broad media attention, its diverse use cases, and the fact that it is free to use.

The rise of custom-built malicious AI models

OpenAI and other AI companies have taken steps to reduce the effectiveness of DAN and other malicious prompts. In response, threat actors have developed AI models designed specifically for malicious use. These models remove ethical and legal limitations and are trained on illicit content, so they readily generate illegal, violent, or sexually explicit material. The two best-known models developed by threat actors are WormGPT and FraudGPT.

WormGPT

WormGPT is a malicious artificial intelligence chatbot that imitates ChatGPT. Unlike ChatGPT, WormGPT willingly provides malicious code, as well as text specifically intended for use in phishing attacks.

Across its collections, Flashpoint captured more than 500 mentions of WormGPT in various illicit online communities between June and July 2023. These discussions included user reviews, instructions for purchasing the tool, and troubleshooting. Most of the chatter was in English, but discussions also took place in other languages, including Arabic, Chinese, and Russian.

FraudGPT

FraudGPT is a malicious Telegram-based chatbot that operates similarly to ChatGPT and WormGPT. Like WormGPT, this tool is willing to provide malicious code. However, when Flashpoint analysts obtained access to the software, they found that the model did not appear to answer questions about phishing or DDoS attacks. It also declined to answer questions involving theft, such as bank robbery.

Take action against AI threats with Flashpoint expertise

Take decisive action against the evolving AI-driven threat landscape. Flashpoint delivers the intelligence and tools necessary to understand and counteract AI-powered cyber threats. To learn how to apply AI strategically with Flashpoint’s approach, which is grounded in analyst workflows and customer outcomes, download AI and Threat Intelligence: A Defenders’ Guide.